When I was in high school, I was browsing through the sci-fi section of a local book fair when I came across two books that sounded right up my alley. I still remember the first sentence of the blurb: “In one cataclysmic moment, millions around the globe disappear.”

The series was called “Left Behind”.

I didn’t make it past the first hundred pages. The authors had this weird need to add every character’s relation to religion into their introduction, and it bothered me. That was fine. My bookshelves are packed with mediocre speculative fiction that I’ve opened only to close half an hour later.

It was only when I mentioned them to my parents that I learned that these weren’t just badly written sci-fi. The Left Behind series was the evangelical christian equivalent of Harry Potter, a massively bestselling book series telling a story that was as much prediction as fiction. I gave them a quick google, went “huh, interesting”, and moved on with my life.

I didn’t think about the Left Behind series again until 2014, when YouTube recommended to me that I watch the trailer for… Left Behind, the movie? Starring NICOLAS CAGE???

What followed was a years-long dive down the rabbit hole that is the Christian Entertainment Industry, culminating in the post you’re reading today.

A few minutes after I watched that trailer, I learned that not only was this movie not a fever dream spawned by an unholy combination of final exams, too much Fox News, and an ill-advised drunken viewing of The Wicker Man, it wasn’t even the first Left Behind adaptation on film. There was a trilogy from the early 2000s, covering the first three books of the sixteen-part series. They had been created by a company called Cloud Ten Pictures, which, according to Wikipedia, specialized in Christian end-of-the-world films.

But for a company to specialize in something implies that there are other companies that don’t. This, in turn, led me to the many, many corporations over the years dedicated to creating literature, music, and of course, film, exclusively by and for the Evangelical Christian movement.

I want to begin by clarifying that I have not seen most of the movies these studios put out. There are hundreds, perhaps thousands of them, and especially with the oldest ones they can be pretty hard to find. But I have seen most of the ones which are on YouTube, which it turns out is a lot of them. And I see three eras, as distinct in tone and style as silent pictures are from the talkies, or the talkies from the Technicolor blockbuster.

1951-1996: Prehistory

Like most movements, you can pick almost any date you like for when the Christian Media Boom began, but I place its foundations on October 2nd, 1951. That’s when evangelical minister Billy Graham’s brand new production company, World Wide Pictures, released Mr. Texas, which Wikipedia tells me was the first Christian Western film in history. I wasn’t able to find a copy of Mr. Texas, but I did find a copy of the second Christian Western ever made, a flick called Oiltown, USA. It’s a classic romp about a greedy atheist oil baron who is guided to The Light by a good-hearted family man named Jim and a cameo by Billy Graham himself. It was made in 1953, and it shows, right down to the black housekeeper whose dialect is two steps removed from “massa Manning”. It takes a ten minute break near the end to show us an unabridged Graham sermon, in its entirety. And I have to tell you, that on its own makes the whole 71 minutes worth watching. He delivers his soliloquy with what I can only describe as a charismatic monotone. The same furious, indignant schoolmaster chastises me with “When Jesus Christ was hanging on the cross, I want you to see him, I want to see the nails in his hands, I want you to see the spike through his feet, I want you to see the crown of thorns upon his brow!” and angrily comforts me that “Christ forgives and forgets our past!” It’s the funniest thing I’ve seen in ages, and yet it’s so earnest, you almost can’t help but be moved.

There’s actually a lot of room for thematic discourse on Oiltown, despite it having fallen so deep into obscurity it’s got a total of one review on IMDb. Halfway through, there is a scene where Les, the oil baron, pulls a gun on one of his employees in a scuffle, before being disarmed by Jim, the Right Proper Christian of the show. The gun, which since “The Maltese Falcon” has been used as a stand-in for the male phallus as a symbol of masculine power that won’t get an NC-17 rating, is first brandished by Les. He is disarmed, or metaphorically castrated, by Jim. Jim engages in christian charity and returns Les’s manhood to him by placing it on the desk. Our Christ stand-in both gives and takes away power, in equal measure. “Christ forgives and forgets our past”, after all.

There’s also a weirdly anti-capitalist message buried in here, though it’s mostly post-textual. While it’s implied that Les is an adulterer and he clearly is capable of murder, the main sin we see in him is greed, manifested by a corporate desire to make money at the expense of a soul. “Here’s my god, two strong hands, a mind to direct them, and a few strong backs to do the dirty work!” he proclaims to Jim when they first meet. It’s unlikely a less christian movie could have gotten away with such progressive messaging in 1953, the same year Joe McCarthy got the chairmanship of the Senate Permanent Subcommittee on Investigations. But I digress.

More importantly, it sets up a few themes we will continue to see in christian film later on. The first, and most glaringly obvious, is the anvilicious message of “christians good, non-christians bad”. The only explicitly non-christian character in the movie, Les, is an adulterous cash-obsessed robber baron who gambles and blackmails and nearly commits murder. Contrast that with Jim and Katherine, the golden couple who can do no wrong, and you see a deeply manichean worldview: for all Billy Graham’s talk that “there is not enough goodness you can do to save yourself”, in his world, good works are the unique domain of the christian people.

Second, you should note that even back then, it’s not enough for Les to simply be an evil man. He is also actively hostile to the church. He sees it as nonsense that he must protect his daughter from at any cost, and says as much to Jim. In the context of the story, that makes sense. This is a Beauty and the Beast tale, with Les as the Beast and Billy Graham as a strangely-cast Belle. For his arc to be how he becomes a good person through Christ, he has to begin at a point of maximal disbelief. But it leaves no room for the quiet disbelief or genuine faith in other religions embodied by most non-christians and even christian non-evangelicals. Sadly, that’s the beginning of a long trend.

World Wide Pictures continued to pump out movies for decades after those first hits. Most of them are rehashes of the same ground broken by Oiltown, USA: a man goes through some rough ordeals and is ultimately saved by God and Graham.

Billy Graham’s outlet stayed the same, but the world around him changed. His brand of evangelicalism was strictly apolitical, and he had cozy relationships with democratic and republican presidents alike. He happily fought for anti-sodomy laws and a swift response to the AIDS crisis in equal measure. His brand was driven by gospel, not republicanism.

That strain of evangelical christianity died with Roe v. Wade, and the cultural upheavals of the 1960s and 70s. The dominance of traditional christian values had been under threat in America for years, but now, we had legalized what in the eyes of the church could only ever be baby murder. The response was a more political religious tradition, and the birth of modern Christian fundamentalism.

This tradition, while still hailing Graham as a forefather, owes just as much to Barry Goldwater and Ronald Reagan for its existence. It believed, and believes to this day, in young- or old-Earth creationism as a valid scientific theory that should be taught alongside evolution in public schools. It rejects the notion of the separation of church and state, arguing that the universally-christian founding fathers formed this country as a christian nation and discounting the words of Thomas Jefferson and the first amendment alike. It rejects the liberal attitudes toward sex that had become popular in the 60s, and perceives promiscuity, homosexuality, and “sexual deviancy” writ large as threats to the very fabric of society. And that’s just the tip of the iceberg.

As of 2017, 80% of evangelical christians subscribe to a religious theory called dispensationalism. Among its tenets is the belief that the second coming of Jesus Christ is tied to the establishment of the state of Israel, and that jews, all jews, must live there before Christ can return to end the world and sort all remaining souls into heaven or hell. What’s more, they believe that it is their duty to bring about that outcome as quickly as possible: they wish to be vindicated in their beliefs. This manifests in rabid support for Israel, and particularly for the Israeli right-wing and its West Bank settlers. Don’t mistake that for support for the Jewish people, however. More on that later.

It also left many of its members open to the worst impulses of the American right. There has always been a paranoid streak in our society, all the way back to the Alien and Sedition Acts. Even Billy Graham himself railed against the creeping influence of communism. Under the leadership of his son, Franklin, and the former segregationist/Moral Majority founder Jerry Falwell, those impulses were magnified into widespread belief in nationalist, even white nationalist conspiracy theories. Many evangelicals genuinely fear the dawn of a New World Order, seeing the seeds of it in the globalist economy and the formation of the UN. They see the influence of “international bankers”, the creation of shared currencies like the euro, even the Catholic church as vectors for the Antichrist’s eventual rise to power.

The prehistoric era of the christian film industry ended in 1994, through the actions of a man named Patrick Matrisciana. Sixteen years before, he had created a christian production company called “Jeremiah Films”, which is still around today. As I’m writing this, its home page prominently displays a trailer for a “documentary” claiming that the Antichrist may be either an alien or a sentient artificial intelligence.

I will also note that its homepage redirects to its store.

Whatever diminished form it may take today, in 1994 the company, or at least its founder, had a far wider influence. With distribution help from Jerry Falwell, Matrisciana produced the documentary The Clinton Chronicles, ostensibly through a group called “Citizens for Honest Government”.

The film is available in its entirety on YouTube, complete with an opening informing us that “unauthorized duplication is a violation of federal law”. Beyond the standard and largely credible accusation of philandering on the part of Bill Clinton, it also claims that he and Hillary engaged in a widespread conspiracy to traffic cocaine through Mena Airport in Arkansas, partook in it themselves, sexually abused young girls, and murdered anyone who threatened to expose them. It relied heavily on the testimonials of a man named Larry Nichols, a disgruntled former Clinton employee who had been fired for malfeasance in 1987, when Bill was still governor of Arkansas. It also paid over $200,000 to various individuals to support his claims. While Matrisciana denied that Falwell had anything to do with the payments, that only leads me to wonder where the money came from instead.

According to the New York Times, about 300,000 copies of The Clinton Chronicles made it into circulation. The general audience for the conspiracies it introduced to the world numbered in the tens of millions.

At this point, the christian film industry had crossed the event horizon. The Clinton Chronicles was an undeniably secular, completely political film, paid for by church dollars with the intent to take down an opponent of the moral majority. And it had gone viral in ways that no prior production from the industry had, all the way back to the days of Oiltown and Mr. Texas. Not only had their message reached a far wider audience by humoring their most paranoid impulses, they had uncovered a way to make large sums of money doing so. And so, at long last, we reach the films that introduced me to this world of mysteries.

1996-2007: The Left Behind Era

The last years of the 20th century were a perfect breeding ground for paranoid conspiracies. The world was undergoing radical social change at a previously unimaginable pace, but more importantly it was uniting. This was the age of Fukuyama’s “The End of History”, when liberal democracy seemed the future across the globe. We saw the formal creation of the EU in 1992 and the Euro in 1999, saw phrases like “made in China” go from obscurity to ubiquity, saw the dawn of the internet in the public consciousness. And with the collapse of the Soviet Union, there was no longer an easy villain on which to foist our cultural ills.

With such societal upheavals comes a natural fear of the changes they bring. The same impulses which today fuel the nationalism of Donald Trump and populists across the globe stoked the fears of the Christian right in 1996. The idea of consolidating the whole of humanity under one financial and political roof meant no more America, the Christian nation. While I doubt many in the Christian right thought in those terms, there was very much an undercurrent of worry that one day you might wake up and not recognize your own country anymore.

Combine this with a religious fascination with the end of the world, and the already-established belief that this end, with its all-controlling Antichrist, was nigh, and you have a recipe for not just Clinton bashing but full-fledged devotion to conspiracy.

These conspiracies defined the second great epoch of the Christian Media Industry. Like the campy sermons of Billy Graham’s heyday, it centered around a single figure: The Left Behind series.

Left Behind started in 1995 as a book series by the minister/writer duo of the late Tim LaHaye and Jerry B. Jenkins. There are twelve books in the main series, plus three prequels, a sequel depicting life after the Second Coming, a spinoff series of children’s books, a Real-Time Strategy game a la Starcraft which gives you the option to play as the Antichrist but not to train women in roles other than nurse and singer, and of course, four movies.

Today, it has sold 65 million copies, more than Little House on the Prairie.

The plot is an exercise in Eschatology, the study of biblical accounts of the end of the world. The rapture happens in the first book, taking all true christians and all children from the earth. The remaining word count is devoted to the titular folks who were left behind as they struggle through the seven years of tribulation before the Second Coming of Christ. The Antichrist is Nicolae Carpathia, originally the Romanian President, who with the initial backing of two “international bankers” soon becomes the UN Secretary-General and later the leader of a group called the Global Community. The Global Community is a totalitarian regime only one or two steps removed from the most fanatical ideas of the New World Order. It has a monopoly on the media through its news network, GNN, it kills anyone that gets in its way, and most importantly, it establishes a new global religion to supersede all others, including Christianity. At first, this religion is called “Enigma Babylon One World Faith”. No, I am not making that name up. Later on, when Carpathia is assassinated only to be resurrected and possessed by Satan himself, this is replaced by “Carpathianism”.

Earlier, I compared these novels to the Harry Potter series for the religious right, and while that comparison is apt in terms of magnitude, it is not accurate in terms of what it means to its audience. Harry Potter was nothing more or less than a work of fiction. It was well-written, easily accessible escapism.

To the religious right, Left Behind is more like The Hunger Games: a stylized representation of our own anxieties about the future. I doubt that any evangelicals believe that the antichrist will be named Nicolae Carpathia, or that a pilot named Rayford Steele and a journalist named Buck Williams will be the keys to our salvation. But as of 2013, 77% of evangelicals in the US believe we are living in the end times right now. For much of Left Behind‘s multimillion-strong audience, this is not theoretical.

I have not read much of the Left Behind series. My knowledge of its text and subtext is largely limited to the extensive and lovingly-written Wikipedia summaries of each book. This is enough to tell me such critical details as the type of car Nicolae and the false prophet, Leon Fortunato, use to flee Christ when he finally returns in Glorious Appearing, or the fate of satanist lackey Viv Ivins (Get it? VIV IVIns, VI VI VI, 666?), but not enough for me to feel comfortable discussing their thematic undertones.

I have, however, seen the films. Created in the early 2000s by Cloud Ten Pictures, they weren’t even the studio’s first foray into christian armageddon. They were preceded by the four-part Apocalypse series, which covered the same ground but without the Left Behind label. Note that the first three Left Behind novels had already been published before Apocalypse: Caught in the Eye of the Storm was released, so these movies likely owe their existence as much to a rights dispute as to the artistic vision of their creators. As for the movies themselves, I cannot do the experience of watching them justice in words. You have to see this shit for yourself.

By 2000, however, Cloud Ten had worked out the kinks and were ready to move forward with their film. It, and both of its sequels, are currently available on YouTube. If you have the time, I encourage you to watch them. I don’t know how it feels to be a born-again christian watching them, but for someone outside the tribe, they are the epitome of “so bad, it’s good”.

That is, until you remember that millions of people out there are dead serious in their appreciation for this story. It departs the realm of the realistic only a few minutes in, when it portrays an immense squadron of what appear to be F-15 eagles invading Israeli airspace and attempting to carpet-bomb Jerusalem.

Whatever your opinions may be on Israel, the threats to its existence don’t take the form of American-made fighter jets flattening cities. Yet the reverent tone in Chaim Rosenzweig when he says “no one has more enemies than Israel” tells us that this sequence is not an over-the-top farce or spoof. This movie genuinely wants us to believe that this is what life is in Israel.

In three movies and 270 minutes of screen time, there is not one mention of Palestine or the Palestinian people. There are only passing references to what “The Arabs” might allow with regard to the Temple Mount.

Earlier, I cautioned you not to confuse the evangelical movement’s support for Israel with support for Jews. By the second movie, you begin to see why. The climax of the film centers around an announcement in Jerusalem by Rabbi Tsion Ben-Judah, “the world’s most knowledgeable and respected religious scholar”. This announcement is ultimately revealed to be that he now recognizes Jesus Christ as the messiah, in light of overwhelming biblical evidence. This image, of a renowned Jewish scholar renouncing his faith to support the ideals of born-again Christianity, is how LaHaye and Jenkins see the Jewish people: Christians in all but name, only a few short steps from embracing the True Light.

To be fair, in that regard the Left Behind series is an equal-opportunity offender. The dichotomy of Christians good, non-Christians bad first seen in Oiltown has reached its logical extreme here. Every person who is remotely reasonable or kind is either a born-again Christian or becomes one by the end of the series. The general dickishness of Les Manning has now become lip service to the literal Antichrist.

Perhaps the best evidence for this worldview is the depiction of the events immediately after the Rapture: as though freed by the absence of Evangelicals to release our inner beasts, those left behind immediately turn to violence and looting. A main character’s car gets stolen off the freeway (what happened to this looter’s car? did he just leave it on the interstate?), martial law is declared within 12 hours, and the world falls into chaos to an almost comical degree. Some of this is realistic, in that if hundreds of millions of people disappeared in an instant there would be vast upheavals, but humanity’s experience with natural disasters has taught us that there are usually as many good samaritans as there are opportunists.

Not so in Left Behind. Recall what Billy Graham said 47 years before: “there is not enough goodness you can do to save yourself”. The good samaritans were all raptured, because all the good samaritans were Christian.

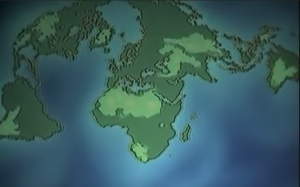

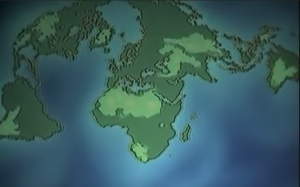

These films, like The Clinton Chronicles, openly embrace the paranoid impulses of their audience. The Antichrist rises to power through the influence of the global financial elite, manipulating and contorting the UN into a vehicle for his ambitions. This is explicitly tied to the drive to unify the world under one government, one language, one currency, and one culture. When Carpathia reveals his plan for world domination in the final moments of the film, the world map he uses to display it is not the Mercator projection that had been used in public schools for a century. It isn’t the newer Robinson projection that the National Geographic Society would happily have given them for free, either.

Instead, viewers are greeted with the obscure and rarely-used azimuthal equidistant projection, which uses the north pole as the center and distorts the southern hemisphere to the point that Antarctica looks like a JPEG artifact. It preserves neither shape nor area, and to the best of my knowledge has only one common use:

It’s the flag of the United Nations.

With all that said, it’s also undeniable that the Left Behind movies did not have the same pull as the books. The first film barely made back its budget, and the sequels didn’t even get a theatrical release. In fact, the production quality was so bad Tim LaHaye sued Cloud Ten Pictures claiming breach of contract. Left Behind 3: World at War came out in 2005, the last Left Behind novel came out in 2007, and after that the Christian right largely moved on. The Google Trends data for the series is a slow, petering death.

The Dark Ages: 2007-2014

After Left Behind, the Christian Media Industry underwent its own seven-year tribulation. The circumstances of the world around them remained ideal for this conspiratorial mindset. If anything, they were improving. The 2008 recession had taken railing against international banks from the realm of antisemitic claptrap into the mainstream, as Americans watched their tax dollars funneled into Wall Street bailouts. And the christian right now had a black, Democratic president who had spent time in a Muslim country in his youth. Indeed, the birther hoax, along with the less common but still widespread belief that Obama himself was the Antichrist, emerged at this time.

Even these environmental shifts, however, could not overwhelm the pressure of demographics. My generation is perhaps the least religious in American history, and as the first millennials entered adulthood, evangelical membership began to plummet.

It wasn’t just a matter of religion, though: the Christian right had built its rhetoric around certain fundamental issues, most notably opposition to gay marriage. That made sense in 2001, when that view was shared by 57% of the electorate, but that majority was dissolving rapidly and with no end in sight. Their issue profile didn’t just fail to appeal to the future of America, it actively turned that future away.

In 2011, 87% of evangelical ministers felt their movement was losing ground. It was the highest number from any church leaders in any country.

The Christian right needed to rebrand, and fast. It needed to completely overhaul its message in a way that spoke the language of millennials, with its emphasis on equal treatment of minority groups, without alienating its traditional congregation, who still cared deeply about traditional values issues like abortion and gay rights.

From that effort came the greatest triumph of the entire industry, a film that catapulted it out of irrelevance and into the national spotlight. It has been the elephant in the room since I began this piece. It’s time to discuss God’s Not Dead.

The Second Coming: 2014-Present

God’s Not Dead exploded onto the national scene like a bomb. While relatively small by the standards of mainstream Hollywood, its nearly $65 million box office take makes it the most successful film out of the Christian film industry by a factor of two. It earned more in its opening weekend than the gross of all Left Behind movies made up to that point combined. It even came out seven months before a Left Behind remake, the Nicolas Cage vehicle that was meant to bring more professionalism to the series. Its box office take ended up at less than half that of God’s Not Dead, despite opening at more than twice as many theaters. This was the new face of Christian Media.

Especially in comparison to the apocalyptic bombast of their predecessors, the God’s Not Dead series is deeply personal. Each movie is, at its heart, a courtroom drama, depicting a case in which God is, metaphorically, on trial. In the first, it’s a university classroom where a professor is forcing a student to sign a pledge that God is dead. In the second, it’s a literal court case, concerning alleged misconduct by a teacher bringing Jesus into the classroom. In the third… We’ll get to that later.

This innovation makes them vastly superior vehicles to their predecessors. I have never been forced to sign a pledge that God is Dead to pass a college course, nor has any student in American history, but we have all encountered a pretentious professor with a political axe to grind. These films appeal to certain universal experiences which are inherently more relatable than The Antichrist.

That is not to say that these movies are good. The first one, for instance, tries to go for a Love Actually-style story featuring several disconnected narratives that are ultimately united by a common theme, but its choice of narratives dooms it from the start. Love Actually succeeded because each story was roughly balanced in terms of the stakes involved, and there really was no “main plot”. Neither is true here, and the result is that you spend a lot of your viewing time wondering why you’re watching the travails of these two preachers trying to rent a car to go to Disney World and when you’ll get back to the interesting part in the classroom.

Thematically, however, they are fascinating in ways that none of their predecessors are, because these don’t just show a heroic struggle of good vs. evil or serve as vehicles for a sermon: they show atheists making their case, as the Evangelical Christian movement hears it. That requires a closer analysis, so we’re going to discuss them in detail, as individual movies. Well, the first two at least. The third was a box office flop and isn’t even available on Pure Flix’s website anymore.

God’s Not Dead (2014):

Just to make sure we’re all on the same page, here’s a brief summary: at an unnamed university, freshman and devout Christian Josh Wheaton signs up for a philosophy class led by the militant atheist, Professor Radisson. Radisson demands all his students begin the class by signing a pledge that God is Dead. Josh refuses, Radisson tells him that if he doesn’t sign he will have to prove God exists to the class, and Josh agrees. There are several other subplots, most of which are there to show how horrible non-christians are, including:

- Amy, a liberal gotcha-journalist with an “I <3 Evolution” bumper sticker on her car, who gets cancer and is dumped by her boyfriend when he finds out (“I think I have cancer”, “this couldn’t wait until tomorrow?”), then converts when she realizes she is alone in the world.

- A Muslim student named Ayisha who has secretly converted to Christianity thanks to sermons by Franklin Graham (son of Christian Film’s progenitor, Billy) on her iPod, and who is then disowned by her father when he finds out.

- Professor Radisson’s abused Christian girlfriend, Mina, and her struggles with their relationship and the care of her senile mother.

- Pastor Dave, played depressingly straight by one of Pure Flix’s founders, trying and failing to get to Disney World.

After a long debate, Josh persuades the class that God’s not dead, and the characters from all these plots wind up together at a concert by Christian pop-rock group The Newsboys. Radisson gets hit by a car outside, but as he is dying, Pastor Dave persuades him to convert to Christianity, saving his soul. Before the credits, they scroll through a long list of real-world court cases where Christians have allegedly been persecuted in the US, on which this film is based.

There are many cringeworthy moments, from the Good Christian Girl approaching Ayisha as she’s putting her headscarf back on and telling her “you’re beautiful… I wish you didn’t have to do that”, to Pastor Dave’s missionary friend looking to the sky and saying “what happened here tonight is a cause for celebration” while standing over Professor Radisson’s corpse, but those aren’t good for more than sad laughter.

For all its bigotry, and there is plenty of barely-disguised Islamophobia in this film, it succeeds because of its small scale. Instead of having a grand conspiracy from the Arabs to control the world, we have a single abusive father who cannot respect his daughter’s choice. Instead of the literal Antichrist, we have one overbearing professor. The adversaries are on a human scale, and therefore feel more believable.

That believability only makes them more pernicious. The underlying assumption, that all non-Christians are evil and all Christians are good, still holds. It’s just harder to dismiss now that these characters are within our experiences of the world as we know it. The Muslim father is physically abusive, beating Ayisha and throwing her out of his house when he finds out she’s converted. Amy’s boyfriend literally dumps her because he doesn’t think it’s fair to him that she has cancer (“you’re breaking our deal!”, he exclaims). Professor Radisson’s friends and colleagues are snobbish, pretentious, and demeaning to Mina over everything from her wine handling to her inability to understand Ancient Greek, which, to be fair, is an accurate depiction of most English majors including myself.

And then, of course, there is Radisson. Unlike the other characters, the writers at least try to give him some depth and development. He is an atheist because as a child, he prayed to God to save his dying mother and it didn’t work. The experience left him a bitter, angry shell of a philosopher who, deep down, still believes in the Christian deity. He just despises him. In the climax, Josh berates his professor with the repeated question, “why do you hate God?” and Radisson finally answers “Because he took everything from me!” This prompts Josh to lay down the killing blow: “How can you hate someone if they don’t exist?”

Score one for the Christians.

For a movie billed as “putting God on trial”, God’s Not Dead spends very little time engaging with the arguments. Radisson only ever makes two points with his screen time: he argues that Stephen Hawking recently said that there is no need for God in the creation of the universe, and he argues that God is immoral because of the Holocaust, tsunamis, the AIDS crisis, etc. More shocking, however, is how little time gets devoted to Josh’s arguments /for/ God. We see a brief animation about the big bang and some vague discussion of Aristotle and the steady-state cosmological theory, we see the argument that evolution of complex life happened very quickly when compared to how long only single-celled organisms existed, and we see the argument that without moral absolutes provided by God, there can be no morality. All told, it’s only about 20 minutes of screen time.

Each of these arguments is deeply flawed. While Aristotle did believe the universe was eternal, Maimonides strongly disagreed with his conclusions, as did Thomas Aquinas. It’s unfair to categorize science as being united in support of the theory. Instead, it’s a perfect example of the scientific method at work: a question was asked, it was debated by countless minds until we developed the technology to test it, and we found our answer. Modern-day forms of animal life did emerge only very recently when compared to the history of all life on Earth, but not only did that process still take millions of years, it also reveals a flaw only in the theory of Evolution as put forward by Darwin more than a century and a half ago. Since his time, we’ve learned that evolution likely functions in what’s called a punctuated equilibrium, where evolution stagnates until systemic environmental changes force the life web to adapt. Hell, even Darwin wrote “Species of different genera and classes have not changed at the same rate, or in the same degree.” And the way Josh dismisses the entire field of moral philosophy with a wave of his hand is borderline offensive. Suffice it to say, there are plenty of ethical frameworks that have no need for a God whatsoever, many of which have existed for thousands of years. I’m partial to Rawls’s Veil of Ignorance, to name one.

Nor is Josh the only one committing egregious crimes against the good name of reason. Radisson’s Hawking non sequitur is a complete appeal to authority: “Hawking says there is no need for a God, so God doesn’t exist”. It’s a piss-poor argument, and Josh is entirely in the right when he shuts it down a few scenes later. And as offensive as Josh’s dismissal of moral philosophy was to me, I must imagine Radisson’s appeal to injustice would feel much the same to religious viewers. The question of human suffering in a God-given world is called Theodicy, and it’s as old as monotheism itself. Every faith has an answer for it, which is why so many of the devout are converted in times of intense suffering. If you’re unfamiliar with this subject, check out The Book of Job.

While both sets of arguments are flawed, Radisson’s are unquestionably worse. Josh’s arguments are the same kind of nonsense you will find on the Christian right from places like PragerU. Josh even cites major evangelical apologists like Lee Strobel in his presentations. His failures reflect real deficiencies in American Evangelical discourse when compared to the breadth of Christian theology, and it’s understandable that a college freshman might not be up to date on Contractualism or the Categorical Imperative.

Radisson’s, by contrast, bear little resemblance to the anti-God arguments you might hear from an atheist professor. He uses some antitheist buzzwords like “Celestial Dictator”, a favorite of the late Christopher Hitchens, but there is no engagement with what Hitchens meant by that phrase. “Celestial Dictatorship” refers to the fact that many traits Christians see in God, that he knows everything, is all powerful, and judges you not just by your actions on Earth but by the intent in your mind to do good or ill, we see as tyrannical despotism in human beings. It’s a nuanced point that you can debate either way. Radisson uses it as a cheap insult. If this were your only experience with atheists, you would think they were all morons.

Some of this is malice, to be sure. It’s impossible to watch this movie and not see the anger the creators feel towards atheists. But there is also apathy here. Once Josh and Radisson agree on the terms of their debate, the professor moves on to assign the class their reading for the week. Two works: Descartes’s “Discourse on the Method”, and Hume’s “The Problems of Induction”.

Hume never wrote a piece with that title. In fact, while he talked at great length about that topic, as I write this, the first result on Google for “The Problems of Induction” tells us that Hume never even used the word “Induction” in his writings. They could have pulled a list of his works off Wikipedia and gone with any title on it and no one would have batted an eye. But they couldn’t be bothered. Instead, they made one up, based on some vague recollection of the topics Hume covered. The movie doesn’t understand the arguments against the existence of God. It doesn’t really understand the arguments for Him. And it doesn’t care to try, because that’s not its purpose.

Its purpose is spelled out in the list of lawsuits it shows you during the end credits. Most of them have nothing to do with the existence of God. Most of them are either about the right to discriminate against LGBT people at universities or misconduct from pro-life groups. I’ve taken screenshots of the list; feel free to peruse them.

You should also note that most of these cases were brought forward by a group called “Alliance Defending Freedom”. The Southern Poverty Law Center classifies them as an anti-LGBT hate group, and their file on the group is a haunting look into the worst of Christian fundamentalism.

The ADF is the legal wing of the far-right Christian movement, and has been instrumental in pushing the narrative that anti-LGBT-discrimination laws impinge on Christians’ First Amendment rights.

That is the underlying message of this film. It has nothing to do with the existence of God. If it did, it would have devoted more time to the debate. It wants you to believe that in this country, Evangelical Christians are a persecuted minority, spat upon by Muslims, atheists, and the academy at large.

And it is their fundamental right, granted to them by God and the First Amendment, to discriminate against The Gay Agenda.

God’s Not Dead 2 (2016)

Cinematically, the sequel is in many ways an improvement over the original. It ditches the first film’s “Christ, Actually” style in favor of a single, unified narrative. While the first movie’s cast of characters (with the notable exception of Josh Wheaton) do show up, their storylines are either much shorter or directly related to the core plot.

They have also changed venues, from the metaphorical trial of a college classroom to the literal trial of a courtroom. A Christian teacher, played by 90’s-Nickelodeon-sitcom star Melissa Joan Hart, is discussing nonviolent protest and Martin Luther King when a student asks her if that’s similar to the teachings of Jesus Christ, and she answers with a few choice quotes from scripture. This lands her in hot water with the school board, and rather than apologize for her statement, she stands by her beliefs. The school board brings in the ACLU (“they’ve been waiting for a case like this”), and tries to not only fire her, but revoke her teacher’s license as well. The trial soon expands to cover whether Jesus the man was a myth, subpoenas of local preachers’ sermons, even a case of appendicitis. In the end, the judge finds for her, and the Newsboys play us out with a new song.

I want to begin by making something very clear: the Christians are in the right here. While religion has no place in a science classroom, I can’t imagine any way to teach AP US History without mentioning Jesus. Martin Luther King was a reverend, and his biblical inspiration is an established historical truth.

Also, Jesus existed. Even if you throw the entire Bible out, both Roman and Jewish scholars refer to him long before Christianity as a religion took off. That doesn’t necessarily mean that he was the Messiah, or even that most of the stories told about him actually happened. But the near-universal consensus of historians and theologians across the globe is that a Jewish rabbi named Jesus Christ was born in Nazareth and crucified on the orders of Pontius Pilate.

This film’s problem is not its inaccuracy or ignorance: it is its slander. I actually spoke with a friend of mine who works at the ACLU about a hypothetical case like the one in the movie. He told me they would absolutely weigh in, but on the side of the teacher. The American Civil Liberties Union’s official position is that, while proselytizing from teachers is unacceptable, mentions of scripture in context are fair game, as are nearly all religious practices by students, on- or off-campus.

Each God’s Not Dead film ends with a new list of Alliance Defending Freedom cases allegedly depicting infringements on religious freedom. In several of the ones I saw in this film, the ACLU wrote briefs supporting the ADF’s position. You can see them on their website. They’re not just lying about the foremost advocate for Civil Liberties in this country, they’re stabbing their own former allies in the back.

There is a reason why the filmmakers made this particular organization the villain of this story. And it’s not just because the ACLU is the front line of the legal opposition to their pro-discrimination interpretation of the First Amendment. It goes back nearly a hundred years, to another trial over religion in the classroom: The Scopes Trial.

In March of 1925, Tennessee passed the Butler Act, a law banning the teaching of Evolution in public schools. The ACLU, then only five years old, offered to defend any teacher arrested for violating the law. A man named John Scopes took them up on it, and in July of that year was taken to court for teaching Darwin in biology class. The Scopes Monkey Trial, as it came to be known, quickly became a widespread national story, and the town of Dayton (population 1,701) played host to the finest prosecutors, defense lawyers, scientists, religious scholars, and reporters in the world. The proceedings themselves were a madhouse, and to my knowledge are the only time a lawyer for the prosecution has been subpoenaed as an expert witness for the defense. Some day, I’ll write another post on the events of the trial, but until then I recommend you read the play Inherit the Wind or watch the 1960 film. Both are based on the actual events of the trial.

Scopes was found guilty, and while he won the appeal on a technicality, the Tennessee Supreme Court declined to say the law constituted an establishment of religion. Indeed, it wasn’t until 1968 that the Supreme Court of the United States ruled that such bans violated the establishment clause. But the trial still stands as the beginning of the end of Creationism in public classrooms. Some of that is warranted: the testimony of prosecution counsel, legendary orator, religious scholar, and three-time Democratic presidential candidate William Jennings Bryan was one of the great debates on the nature of skepticism and the historicity of testament of all time. It’s also one that Bryan lost, badly. The legal victory has done nothing to heal the wounds that defeat inflicted on the creationist cause.

That is the underlying purpose of this story: to relitigate the Scopes trial. It even has a climax featuring an unorthodox move by the defense, when the lawyer calls his own client, the teacher, to the stand as a hostile witness. During that testimony, he harangues her into tearfully admitting that she feels she has a personal relationship with God, and in a masterful display of reverse-psychology uses that to appeal to the Jury that they should destroy her.

In Inherit the Wind’s version of Bryan’s testimony, their renamed version of him eventually claims that God has spoken to him that Darwin’s book is wrong. The defense lawyer mocks him for this answer, declaring him “the prophet Brady!” Same scene, different day.

Their fixation on this trial doesn’t just hurt them by forcing them to slander the ACLU to keep the same opposition in the courtroom: they also overlook a much more interesting legal plotline. During the trial, the school’s lawyers subpoena documents from local preachers, including all their sermons. This actually happened, and whatever your opinion on LGBT protection laws, it should make you feel a little queasy. The pastors had a legitimate case that subpoena power over the pulpit could be abused to prevent the free exercise of religion, even as their opponents had a case that public figures’ speeches advocating for political ends should be admitted to the public record. It’s a complicated issue which sets the First and Fourteenth Amendments in direct conflict with one another, and could make for a truly thrilling drama. In God’s Not Dead 2, it’s just a footnote. Another way to show the ACLU is evil.

Forget that the ACLU actually opposed the sermon subpoenas.

There are many other carry-overs from the original film. It is, if anything, even more hostile to Atheists than its predecessor. One of the subplots follows the child who asked the question about Martin Luther King and Jesus in the first place. She has recently lost a brother, which has driven her to Christ in secret. Her parents are proud skeptics, and are offended by what they see as her teacher proselytizing in class. They are also completely apathetic to the death of their other child. At least Radisson showed some small semblances of caring for his fellow Man.

Once again, the only plotline featuring nonwhite characters also features an abusive father. In lieu of Ayisha, they turn to Martin, a Chinese student with a minor role in the first film, who converts to Christianity and is disowned by his father for it. I am more sympathetic to this story than the last, since anti-Christian oppression is a real and well-documented problem in China, but the lack of other nonwhite characters (besides Pastor Dave’s friend, who has done nothing in two movies) makes the choice stick out all the same.

And it has no problem giving a truly heinous interpretation of our Constitution. The defense lawyer, Tom Endler, begins his case by (correctly) pointing out that the phrase “separation of church and state” does not appear in our Constitution. Instead, it came from a letter Thomas Jefferson wrote to the Danbury Baptists, assuring them that the government would not interfere with their right to worship. Endler then claims that this has been twisted in today’s times to mean that all religion must be excluded from the public sphere. This reflects an interpretation of the Establishment Clause shared by much of the Christian right called “Accommodationism”.

Essentially, the accommodationist perspective argues that the Establishment Clause should be narrowly read to cover only the formal establishment of a state religion, rather than to require that the government maintain no religious preferences at all. Its proponents argue that the United States was established as a Christian Nation, though not of any one denomination, and that laws which reflect that ought not be struck down. In particular, they see a role for religion in general in the public legal sphere: that it “combines an objective, nonarbitrary basis for public morality with respect for the dignity and autonomy of each individual”. And there are some compelling arguments for the theory. Yet it rests on a tortured reading of the Founding Fathers’ discourse before and after the Constitution was ratified.

They were clear, for instance, that the protections of the First Amendment did not only apply to Christians. George Washington, writing to the nation’s first Jewish congregation the year before the First Amendment was ratified:

“It is now no more that toleration is spoken of, as if it were by the indulgence of one class of people, that another enjoyed the exercise of their inherent natural rights. For happily the Government of the United States, which gives to bigotry no sanction, to persecution no assistance requires only that they who live under its protection should demean themselves as good citizens, in giving it on all occasions their effectual support.”

Nor was Washington the only one who extended these protections to Jews. In Thomas Jefferson’s autobiography, he wrote of a proposed addition of “Jesus Christ” to the preamble of his Virginia Statute of Religious Freedom, a predecessor to the First Amendment. It was defeated soundly, and the bill was passed without the reference. Jefferson writes: “they meant to comprehend, within the mantle of it’s protection, the Jew and the Gentile, the Christian and Mahometan, the Hindoo and infidel of every denomination”.

More specifically, on the subject of Jewish schoolchildren being taught the King James Bible, he wrote: “I have thought it a cruel addition to the wrongs which that injured sect have suffered that their youths should be excluded from the instructions in science afforded to all others in our public seminaries by imposing on them a course of theological reading which their consciences do not permit them to pursue”.

Clearly a man who felt that biblical teachings like those mandated in the Scopes Trial were allowed under the Establishment Clause.

As for the argument that Religion provides a nonarbitrary basis for public morality, that same Virginia Statute: “That our civil rights have no dependence on our religious opinions any more than our opinions in physics or geometry”.

That’s not to say that Accommodationism is a wholly bankrupt ideology. It’s all well and good to say that our Government cannot prefer one religion over another, but there are plenty of cases in which a full commitment to the Establishment Clause would constitute a de-facto violation of Free Exercise. But the version espoused by the creators of God’s Not Dead 2 is not interested in those grey areas. They believe that the United States was established first and foremost as a Christian Nation, and that our laws should reflect that supposed truth.

Or, in their words: “Unfortunately, in this day and age, people seem to forget that the most basic human right of all is the right to know Jesus.”

Where We Stand Today

God’s Not Dead: A Light in Darkness, the third installment of the series, was released in March of last year. It couldn’t even make back its budget, grossing less overall than either of its predecessors did on their opening weekends. Yet the Christian Film Industry lives on, with hundreds of movies available on PureFlix and more coming out every year.

Don’t mistake this for market domination. Even within conservative Christian circles, total interest in these films has never managed to eclipse that of Rush Limbaugh alone. The conservative media sphere is vast, and even God’s Not Dead was dwarfed by players like Fox News.

But that doesn’t mean it is meaningless. Even the third film of the series reached more people than the average readership of the National Review, long seen as the premier intellectual forum of the conservative movement. And unlike Fox News, these movies function largely invisibly: you won’t find them on Netflix, but to the target demographic, they’re sorted by subcategory (“apocalypse” and “patriotic”, to name a few) on its Christian doppelgänger. Their message is being heard loud and clear.

And that message is toxic. The world on display in God’s Not Dead, Left Behind, and all their knock-offs and contemporaries is one unrecognizable to those of us on the outside. It is a world divided into Christians and evildoers, where nonbelievers are at best abusive fathers and at worst servants of the Antichrist. It is a world in which the majority religion of the United States is subject to increasing victimization and oppression on the part of an Atheist elite hellbent on destroying God. It is one where powerful institutions for the public good, the ACLU, the UN, even our high schools and universities, have been bent to that elite’s will. It is one that tells you that your identity is under attack, and that you must act to defend it.

Whatever they may have been in 1951, today these movies are not sermons on God. They are political propaganda. They are a carefully crafted cocktail of Christian trappings and conspiracy theories, designed to make their viewers see Muslims as hateful child-beaters, Atheists as amoral oppressors, and the fundamental tenets of liberalism and pluralism as attacks on their faith.

It doesn’t have to be this way. The same year that gave us God’s Not Dead gave us Noah, a biblical story that grossed six times as much and got far better reviews. And even in these films, there are moments of real honesty and insight. In the first Left Behind film, there’s a scene in a church where everyone in the congregation has been raptured, except for the Preacher. We get to watch him, alone with the symbols of his God, processing his loss and what it means for his faith. It’s a little over-the-top, but it also forced me to put my phone down and think about the ethics of what I was watching. You wind up pondering consequentialism and virtue ethics, wondering why his role in his congregation’s salvation doesn’t count, whether it should if his motives were corrupt, all while watching real grief from a man wrestling with his faith.

Maybe I’m just a sucker for climactic scenes where men call out God in the middle of empty churches. But I think there’s more to it than that. There are good moments, lots of them, in these films. If they wanted to, these directors and actors and producers could force their audiences to confront their own assumptions, to strengthen their faith through genuine interrogation. They could give us a catechism for the modern, more uncertain age.

They can. They just choose not to.